Task Results

Task overview: [Slides] [Presentation video]

Participant results: [Playlist of all presentation videos]

Task Description

The C@merata task is a combination of natural language processing (NLP) and music information retrieval (MIR). The inputs are a short natural language phrase referring to a musical feature (e.g. 'F# in the violin') and a music score in MusicXML. The required output is a list of passages in the score that match the query.

In the example 'F# in the violin', we are looking for a single note of unspecified duration played by a violin. A passage specifies the start measure (bar) and the exact beat in that bar where the note commences, together with the end measure and beat. Suppose the very first note in the violin part is an F# half note (minim), the time signature is 4/4, and we are instructed to give the answer in quarter notes (i.e. divisions=1). A correct answer passage would then be [4/4,1,1:1-1:2].
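As a rough illustration of the passage notation, the Python sketch below builds a passage string from a start position and a length. The function names are hypothetical. The rule that the end beat is the last unit the note occupies is inferred from the single example above and is not an official definition. The sketch also assumes a constant time signature.

```python
from fractions import Fraction


def units_per_bar(time_sig: str, divisions: int) -> int:
    """Counting units in one bar, where divisions = units per quarter note."""
    numerator, denominator = (int(x) for x in time_sig.split("/"))
    units = Fraction(numerator * 4 * divisions, denominator)
    if units.denominator != 1:
        raise ValueError("divisions too coarse for this time signature")
    return int(units)


def format_passage(time_sig, divisions, start_bar, start_beat, length):
    """Build a passage string; start_beat and length are counted in divisions.

    Assumption: the end beat is the last unit occupied by the passage,
    which reproduces the half-note example in the text.
    """
    per_bar = units_per_bar(time_sig, divisions)
    last = (start_beat - 1) + (length - 1)  # 0-based offset of the last unit
    end_bar = start_bar + last // per_bar
    end_beat = last % per_bar + 1
    return f"[{time_sig},{divisions},{start_bar}:{start_beat}-{end_bar}:{end_beat}]"


# Half note (two quarter-note units) on the first beat of bar 1 in 4/4.
print(format_passage("4/4", 1, 1, 1, 2))  # [4/4,1,1:1-1:2]
```

The same helper handles a passage that crosses a barline, provided the time signature does not change in between.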

We are interested in this task because of the connection between musicological texts and music scores. Texts frequently make reference to musical passages e.g. Anthony Hopkins on Beethoven: 'giant unison G from the entire orchestra' or Deryck Cooke on Bruckner: 'themes based on falling octaves'. We would like to know the passages these are referring to, for musicological and pedagogical reasons (see [5] for detailed discussion).

The task has run for three years (2014, 2015, 2016 [3,4,6]) and each year there have been twenty scores with ten questions asked about each, 200 questions in all. Note that it is not necessary to have participated in C@merata in the past to be successful in 2017: the task actively welcomes new participants.

The C@merata task developed from earlier work in which we asked multiple-choice questions about texts, one third of which were about music and were derived with permission from sources such as Grove's Dictionary of Music and Musicians, Gutenberg, 1911 Encyclopedia and Wikipedia [1,2].

The scores are all in MusicXML, which is a standard format for capturing almost all aspects of a complex score (for example a Beethoven symphony). A MusicXML file can be parsed using a tool such as music21, whereupon any information you require can readily be extracted for indexing or matching. Evaluation of results is via versions of Precision and Recall relative to a Gold Standard which we produce each year.
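To give a feel for what extraction from MusicXML involves, here is a minimal sketch that lists the pitched notes in a tiny hand-written score fragment. It deliberately uses Python's standard xml.etree.ElementTree rather than music21, so that it runs without extra installs. The fragment is not one of the task scores, and the function name is hypothetical. Chords, ties, multiple voices and the backup/forward elements are ignored here; a real system would need to handle them.

```python
import xml.etree.ElementTree as ET

# A tiny hand-written MusicXML fragment (not one of the task scores).
SCORE = """<score-partwise version="3.0">
  <part-list>
    <score-part id="P1"><part-name>Violin</part-name></score-part>
  </part-list>
  <part id="P1">
    <measure number="1">
      <attributes><divisions>1</divisions></attributes>
      <note>
        <pitch><step>F</step><alter>1</alter><octave>4</octave></pitch>
        <duration>2</duration>
      </note>
      <note>
        <pitch><step>G</step><octave>4</octave></pitch>
        <duration>2</duration>
      </note>
    </measure>
  </part>
</score-partwise>"""

ACCIDENTALS = {-1: "b", 0: "", 1: "#"}


def list_notes(xml_text):
    """Return (part name, measure number, pitch name, duration) per pitched note."""
    root = ET.fromstring(xml_text)
    names = {sp.get("id"): sp.findtext("part-name") for sp in root.iter("score-part")}
    found = []
    for part in root.findall("part"):
        for measure in part.findall("measure"):
            for note in measure.findall("note"):
                pitch = note.find("pitch")
                duration = note.findtext("duration")
                # Rests have no <pitch>; grace notes have no <duration>.
                if pitch is None or duration is None:
                    continue
                alter = int(float(pitch.findtext("alter", default="0")))
                name = (pitch.findtext("step")
                        + ACCIDENTALS.get(alter, "?")
                        + pitch.findtext("octave"))
                found.append((names[part.get("id")], measure.get("number"),
                              name, int(duration)))
    return found


for row in list_notes(SCORE):
    print(row)
```

With divisions=1, the duration value 2 on the first note corresponds to the F# half note of the earlier example.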

Over the last three years, the task has developed. In the first year, queries were mostly very simple ('G5', 'dotted quarter note') though a few were more complex ('perfect cadence', 'polyphony'). In 2015, we classified the simplest queries as 1_melod and added n_melod ('twenty semiquavers', 'five note melody in bars 1-10') as well as 1_harm ('chord of D minor in measures 109-110'), follow ('quavers F4 E4 in the oboe followed by quavers E2 G#2 in the bass clef') and synch ('crotchet D3 on the word “je” against a minim D2'). Then, in 2016, we added n_harm ('A5 pedal in bars 116-138', 'alternating fourths and fifths in the Oboe in bars 1-100'); queries were generally more complicated and some were deliberately vague ('five-note melody in the cello in measures 20-28'). Scores have become more complicated as well. In 2014, most scores had two or three staves, and the maximum was five. In 2015 there were several with six to eight staves and one with nineteen; in 2016 there were several with between ten and eighteen staves.

In 2016, some queries were derived from real-world sources such as music theory exam papers, musicological textbooks and webpages, and Grove’s Dictionary of Music and Musicians (Grove Online, with permission). We intend to continue that trend this year. We also propose to facilitate participation by presenting the task in two parts:

1. Analysis of natural language input, leading to a structured representation of the query.

2. Retrieval from an MIR system based on the structured representation.

Participants will then be able to choose from two levels of participation:

1. Building an end-to-end system to answer the queries, as was done at C@merata 2014, 2015, 2016.

2. Using the structured representations as a starting point and themselves building an MIR system to search the scores.
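To make the first of the two parts described above more concrete, the sketch below turns a very simple query into a structured record. Everything here is an assumption made for illustration: the field names, the regular expression and the function name are hypothetical, and this is not the structured representation supplied by the task. It only handles queries of the form "pitch, optionally in the instrument, optionally in bars m-n".

```python
import re

# Hypothetical pattern for queries such as 'F# in the violin' or
# 'A5 in the oboe in bars 116-138'. Anything else is rejected.
QUERY = re.compile(
    r"^(?P<step>[A-G])(?P<accidental>[#b]?)(?P<octave>\d?)"
    r"(?: in the (?P<part>[A-Za-z ]+?))?"
    r"(?: in (?:bars|measures) (?P<first>\d+)-(?P<last>\d+))?$"
)


def parse_query(text):
    """Return a dict describing the query, or None if it is not recognised."""
    m = QUERY.match(text.strip())
    if m is None:
        return None
    return {
        "step": m["step"],
        "alter": {"#": 1, "b": -1, "": 0}[m["accidental"]],
        "octave": int(m["octave"]) if m["octave"] else None,
        "part": m["part"],
        "bars": (int(m["first"]), int(m["last"])) if m["first"] else None,
    }


print(parse_query("F# in the violin"))
print(parse_query("perfect cadence"))  # not covered by this toy pattern
```

A retrieval component in the second level of participation could then match such a record against notes extracted from the score, without needing to interpret the original wording.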

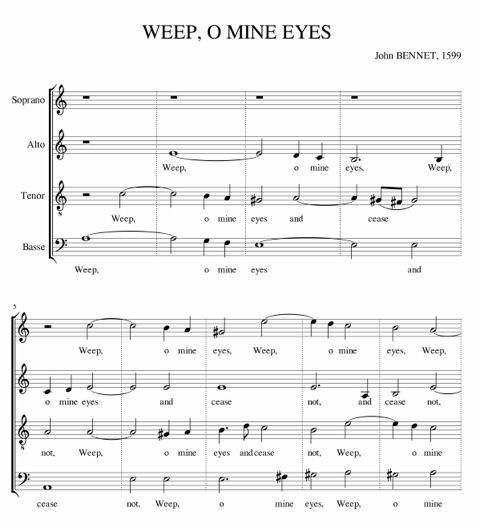

In order to gain more insight into the task, please see these examples from C@merata 2016 related to the score below:

Target group

This task will suit computer scientists who have a good knowledge of Western classical music, as well as musicians or musicologists who have a good knowledge of programming. In particular, if you are a Python programmer, we have tools which can help you get started. We would also welcome interested musicologists who could suggest questions and scores, or help to prepare answers.

Data

Queries and scores are provided by the task.

Ground truth and evaluation

To evaluate systems, we define an answer to be Beat Correct if it starts and ends in the correct bars and at the correct beat in each bar. An answer is Measure Correct if it starts and ends in the correct bars, though not necessarily at the correct beats. We then define Beat Precision and Beat Recall in terms of Beat Correct passages, and similarly Measure Precision and Measure Recall in terms of Measure Correct passages. Evaluation is based on a Gold Standard produced by the organisers.
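The following sketch shows one way these four measures might be computed for a single query. It assumes the usual definitions (precision is correct answers divided by answers returned, recall is correct answers divided by Gold Standard answers) and lets each Gold Standard passage be matched at most once. The exact formulas used by the organisers are given in the task papers [3,4,6], so treat this as an approximation. The Passage type and function names are hypothetical.

```python
from collections import Counter
from typing import NamedTuple


class Passage(NamedTuple):
    start_bar: int
    start_beat: int
    end_bar: int
    end_beat: int


def beat_key(p):
    """Beat Correct compares bars and beats."""
    return (p.start_bar, p.start_beat, p.end_bar, p.end_beat)


def measure_key(p):
    """Measure Correct compares bars only."""
    return (p.start_bar, p.end_bar)


def precision_recall(returned, gold, key):
    """Precision and recall, matching each gold passage at most once."""
    remaining = Counter(key(g) for g in gold)
    correct = 0
    for r in returned:
        k = key(r)
        if remaining[k] > 0:
            remaining[k] -= 1
            correct += 1
    precision = correct / len(returned) if returned else 0.0
    recall = correct / len(gold) if gold else 0.0
    return precision, recall


gold = [Passage(1, 1, 1, 2), Passage(5, 3, 5, 4)]
returned = [Passage(1, 1, 1, 2), Passage(5, 1, 5, 2), Passage(9, 1, 9, 1)]

print("Beat P/R:   ", precision_recall(returned, gold, beat_key))
print("Measure P/R:", precision_recall(returned, gold, measure_key))
```

In this made-up example the second returned passage lies in the right bar but on the wrong beats, so it counts towards the Measure scores and not the Beat scores.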

Recommended reading

[1] Peñas, A., Hovy, E., Forner, P., Rodrigo, A., Sutcliffe, R., Sporleder, C., Forascu, C., Benajiba, Y., Osenova, P. (2012). Overview of QA4MRE at CLEF 2012: Question Answering for Machine Reading Evaluation. Proceedings of QA4MRE-2012. Held as part of CLEF 2012.

[2] Sutcliffe, R., Peñas, A., Hovy, E., Forner, P., Rodrigo, A., Forascu, C., Benajiba, Y., Osenova, P. (2013). Overview of QA4MRE Main Task at CLEF 2013. Online Working Notes, CLEF 2013 Evaluation Labs and Workshop, 23-26 September 2013, Valencia, Spain.

[3] Sutcliffe, R. F. E., Crawford, T., Fox, C., Root, D. L., & Hovy, E. (2014). Shared Evaluation of Natural Language Queries against Classical Music Scores: A Full Description of the C@merata 2014 Task. Proceedings of the C@merata Task at MediaEval 2014.

[4] Sutcliffe, R. F. E., Fox, C., Root, D. L., Hovy, E., & Lewis, R. (2015). Shared Evaluation of Natural Language Queries against Classical Music Scores: A Full Description of the C@merata 2015 Task. Proceedings of the C@merata Task at MediaEval 2015.

[5] Sutcliffe, R. F. E., Crawford, T., Fox, C., Root, D. L., Hovy, E., & Lewis, R. (2015). Relating Natural Language Text to Musical Passages. Proceedings of the 16th International Society for Music Information Retrieval Conference, Malaga, Spain, 26-30 October, 2015.

[6] Sutcliffe, R. F. E., Collins, T., Hovy, E., Lewis, R., Fox, C., Root, D. L. (2016). The C@merata task at MediaEval 2016: Natural Language Queries Derived from Exam Papers, Articles and Other Sources against Classical Music Scores in MusicXML. Proceedings of the MediaEval 2016 Workshop, Hilversum, The Netherlands, October 20-21, 2016.

Contact person

Richard Sutcliffe, University of Essex, UK rsutcl at essex.ac.uk