The MediaEval 2017 Workshop took place at Trinity College Dublin on 13-15 September 2017, co-located with CLEF 2017. The workshop brought together task participants to present and discuss their findings and to prepare for future work. A list of the tasks offered in MediaEval 2017 is below. Workshop materials and media are available online (see also the individual links below):

- MediaEval 2017 Working Notes Proceedings: http://ceur-ws.org/Vol-1984

- Overview of MediaEval 2017: Presentation video including summaries of all tasks.

- MediaEval 2017 Program: Full Version and Overview Version

- Slides of presentations given at the workshop and the task overview posters: http://www.slideshare.net/multimediaeval

- Presentation videos: MediaEval Community YouTube Channel

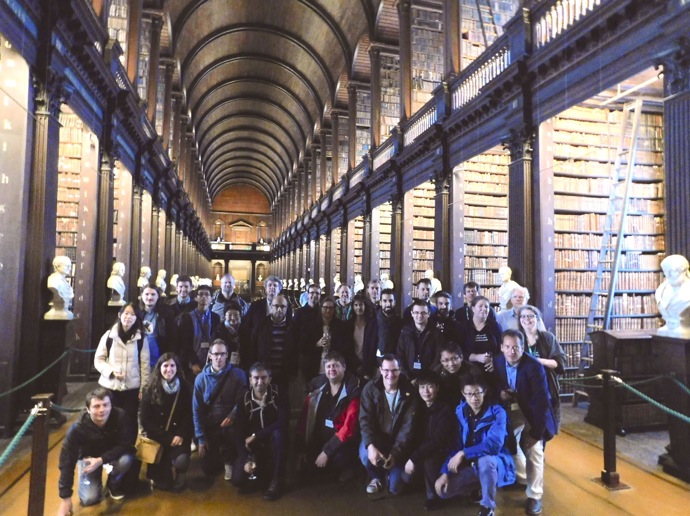

- For pictures, check out MediaEval on Flickr

Cite the MediaEval 2017 Proceedings as:

Guillaume Gravier, Benjamin Bischke, Claire-Hélène Demarty, Maia Zaharieva, Michael Riegler, Emmanuel Dellandrea, Dmitry Bogdanov, Richard Sutcliffe, Gareth J.F. Jones, Martha Larson (eds.) 2017. Working Notes Proceedings of the MediaEval 2017 Workshop, Dublin, Ireland, September 13-15, 2017. http://ceur-ws.org/Vol-1984

MediaEval 2017 Tasks

The results for each task are summarized in the task overview presentation (click on "Slides" and/or "Presentation video").

Retrieving Diverse Social Images Task

Summary of 2017 task results: [Slides] [Presentation video]

This task requires participants to refine a ranked list of Flickr photos retrieved using general purpose, multi-topic queries. Results are evaluated with respect to their relevance to the query and with respect to the degree of visual diversification of the final image set. Read more...

Emotional Impact of Movies Task

Summary of 2017 task results: [Slides] [Presentation video]

In this task, participating teams are expected to develop systems that predict the emotional impact of movie clips according to two use cases: (1) induced valence and arousal scores, and (2) induced fear. The training data consists of Creative Commons-licensed movies (professional and amateur) together with human valence-arousal annotations. Results on a test set will be evaluated using standard evaluation metrics. Read more...

Predicting Media Interestingness Task

Summary of 2017 task results: [Slides] [Presentation video]

This task requires participants to automatically select frames or portions of movies/videos which are the most interesting for a common viewer. To solve the task, participants can make use of the provided visual, audio and text content. System performance is to be evaluated using standard Mean Average Precision. Read more...
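
As an illustration of the metric named above, here is a minimal sketch of Mean Average Precision over ranked lists of binary relevance judgments. It is not the official task scorer: the function names are hypothetical, and the sketch assumes that every relevant item appears somewhere in each ranked list, so average precision is normalized by the number of relevant items found.

```python
from typing import Sequence


def average_precision(ranked_relevance: Sequence[int]) -> float:
    """Average precision for one ranked list (1 = relevant, 0 = not relevant).

    Assumption: all relevant items are present in the list, so the sum of
    precision values is divided by the number of relevant items found.
    """
    hits = 0
    precision_sum = 0.0
    for rank, relevant in enumerate(ranked_relevance, start=1):
        if relevant:
            hits += 1
            precision_sum += hits / rank  # precision at this rank
    return precision_sum / hits if hits else 0.0


def mean_average_precision(runs: Sequence[Sequence[int]]) -> float:
    """Mean of the per-list average precision values."""
    if not runs:
        return 0.0
    return sum(average_precision(run) for run in runs) / len(runs)
```

For example, a list judged `[1, 0, 1, 0]` has an average precision of (1/1 + 2/3) / 2, and the mean over several videos gives a single score per system.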

Multimedia Satellite Task

Summary of 2017 task results: [Slides] [Presentation video]

This task requires participants to retrieve multimedia content from social media streams (Flickr, Twitter, Wikipedia) about events that can be remotely sensed, such as flooding, fires, and land clearing, and to link that content to satellite imagery. The purpose of this task is to augment events captured by satellite images with social media reports in order to provide a more comprehensive view. This year, the task focuses on flooding. The multimedia satellite task is a combination of satellite image processing, social media retrieval and fusion of both modalities. The subtasks will be evaluated with Precision and Recall metrics. Read more...

Medico: Medical Multimedia Task

Summary of 2017 task results: [Slides] [Presentation video]

The goal of the task is to analyse the content of medical multimedia data efficiently in order to identify and classify diseases. The data consists of video frames for at least five different diseases of the human gastrointestinal tract. The evaluation is based on Precision, Recall and Weighted F1 score, on the creation of a useful text report of the findings, and on the amount of training data used. The test set labels are created by medical experts. Read more...
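
To make the classification metrics concrete, the following is a minimal sketch of per-class Precision, Recall and F1, combined into a support-weighted F1 score. It is an illustration rather than the task's official evaluation script: the function names and the class labels in the usage note are hypothetical, and "weighted" is assumed to mean weighting each class's F1 by its number of true instances.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence, Tuple


def per_class_scores(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Dict[Hashable, Tuple[float, float, float]]:
    """Return {label: (precision, recall, f1)} for single-label predictions."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative
    scores = {}
    for label in set(y_true) | set(y_pred):
        predicted = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        precision = tp[label] / predicted if predicted else 0.0
        recall = tp[label] / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores[label] = (precision, recall, f1)
    return scores


def weighted_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """F1 averaged over classes, weighted by each class's true-instance count."""
    if not y_true:
        return 0.0
    support = Counter(y_true)
    scores = per_class_scores(y_true, y_pred)
    return sum(scores[label][2] * n for label, n in support.items()) / len(y_true)
```

With the hypothetical labels `y_true = ["class_a", "class_a", "class_b", "class_c"]` and `y_pred = ["class_a", "class_b", "class_b", "class_c"]`, the per-class F1 values are 2/3, 2/3 and 1, and `weighted_f1(y_true, y_pred)` combines them according to how often each class occurs in `y_true`.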

AcousticBrainz Genre Task: Content-based music genre recognition from multiple sources

Summary of 2017 task results: [Slides] [Presentation video]

This task invites participants to predict the genre and subgenre of unknown music recordings (songs) given automatically computed features of those recordings. We provide a training set of such audio features taken from the AcousticBrainz database, along with genre and subgenre labels from four different music metadata websites. The taxonomies that we provide for each website vary in their specificity and breadth. Each source has its own definition of its genre labels, meaning that these labels may differ between sources. Participants must train model(s) using this data and then generate predictions for a test set. Participants can choose to consider each set of genre annotations individually or to take advantage of combining sources. Read more...

C@MERATA: Querying Musical Scores with English Noun Phrases Task

Summary of 2017 task results: [Slides] [Presentation video]

The input is a natural language phrase referring to a musical feature (e.g., ‘consecutive fifths’) together with a classical music score and the required output is a list of passages in the score which contain that feature. Scores are in the MusicXML format which can capture most aspects of Western music notation. Evaluation is via versions of Precision and Recall relative to a Gold Standard produced by the organisers. Read more...

MediaEval 2017 Timeline

3 December 2016: Task proposals due

February-March 2017: MediaEval survey

Mid-February 2017: Tasks are announced

April-May: Training data release

May-June: Test data release

June-Mid-August: Work on algorithms

Mid-August: Submit runs

Late August: Working Notes papers due

Early September: Camera-ready Working Notes papers due

13-15 September: MediaEval 2017 Workshop in Dublin

MediaEval 2017 Workshop

The MediaEval 2017 Workshop was held 13-15 September 2017 at Trinity College Dublin in Dublin, Ireland. It was co-located with CLEF 2017 and featured joint sessions and cross-over discussions.

Did you know?

Over its lifetime, MediaEval teamwork and collaboration have given rise to over 600 papers, not only in the MediaEval workshop proceedings but also at conferences and in journals. Check out the MediaEval bibliography.

General Information about MediaEval

MediaEval was founded in 2008 as a track called "VideoCLEF" within the CLEF benchmark campaign. In 2010, it became an independent benchmark and in 2012 it ran for the first time as a fully "bottom-up benchmark", meaning that it is organized for the community, by the community, independently of a "parent" project or organization. The MediaEval benchmarking season culminates with the MediaEval workshop. Participants come together at the workshop to present and discuss their results, build collaborations, and develop future task editions or entirely new tasks. MediaEval co-located itself with ACM Multimedia conferences in 2010, 2013, and 2016, and with the European Conference on Computer Vision in 2012. It was an official satellite event of Interspeech conferences in 2011 and 2015. Past working notes proceedings of the workshop include:

MediaEval 2012: http://ceur-ws.org/Vol-807

MediaEval 2013: http://ceur-ws.org/Vol-1043

MediaEval 2014: http://ceur-ws.org/Vol-1263

MediaEval 2015: http://ceur-ws.org/Vol-1436

MediaEval 2017 Community Council

Guillaume Gravier (IRISA, France)

Gareth Jones (Dublin City University, Ireland)

Bogdan Ionescu (University Politehnica of Bucharest, Romania)

Martha Larson (Delft University of Technology, Netherlands)

Mohammad Soleymani (University of Geneva, Switzerland)

Bart Thomee (YouTube, US)

Acknowledgments

Key contributors to 2017 organization

Saskia Peters (Delft University of Technology, Netherlands)

Bogdan Boteanu (University Politehnica of Bucharest, Romania)

Gabi Constantin (University Politehnica of Bucharest, Romania)

Andrew Demetriou (Delft University of Technology, Netherlands)

Richard Sutcliffe (University of Essex, UK)

Thank you to Trinity College Dublin and especially to the Library.

Sponsors and Supporters:

Congratulations to our AAAC Travel Grant Recipients Gabi Constantin (University Politehnica of Bucharest, Romania) and Ricardo Manhães Savii (Federal University of São Paulo, Brazil), who participated in the MediaEval 2017 Predicting Media Interestingness Task and received travel grants from the Association for the Advancement of Affective Computing (AAAC). MediaEval would like to thank AAAC for its generosity.

We are also very grateful to Simula for their support in the form of a travel grant for the MediaEval 2017 Multimedia for Medicine Task (Medico).

Through the years, MediaEval has also benefited from the support of ACM SIGIR. Once again this year, they have provided us with funding that makes it possible to support students and early-career researchers in traveling to attend the workshop. We would like to extend a special thanks to them for their essential support.

Technical Committee TC12 "Multimedia and Visual Information Systems" of the International Association for Pattern Recognition

For information on how to become a sponsor or supporter of MediaEval 2017, please contact Martha Larson m (dot) a (dot) larson (at) tudelft.nl